tensorflow - Why does my Inception and LSTM model with 2M parameters take 1 GB of GPU memory? - Stack Overflow
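
For scale, here is a back-of-the-envelope sketch of the memory that 2M parameters need on their own. It assumes float32 weights (4 bytes each). As a hypothetical training setup, it also assumes one gradient per parameter plus two Adam moment buffers. The helper name `weight_memory_mib` is illustrative only. Both estimates stay in the tens of MiB, so most of an observed 1 GB is presumably not the parameters themselves. Other candidates include activations kept for backpropagation and framework or allocator overhead.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model parameters.
# Assumption: parameters are stored as float32 (4 bytes each).
BYTES_PER_FLOAT32 = 4


def weight_memory_mib(num_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Return the memory, in MiB, needed to store num_params parameters."""
    return num_params * bytes_per_param / (1024 ** 2)


params = 2_000_000  # parameter count taken from the question title

weights = weight_memory_mib(params)

# Hypothetical training setup: weights + one gradient per parameter
# + two Adam moment buffers, i.e. four float32 copies in total.
with_grads_and_adam = weight_memory_mib(params) * 4

print(f"weights only: {weights:.1f} MiB")
print(f"weights + grads + 2 Adam slots: {with_grads_and_adam:.1f} MiB")
```

This does not count activations or per-layer workspace buffers, which scale with batch size and sequence length rather than with the parameter count.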

CUDNNError: CUDNN_STATUS_BAD_PARAM (code 3) while training lstm neural network on GPU · Issue #1360 · FluxML/Flux.jl · GitHub

DeepBench Inference: RNN & Sparse GEMM - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

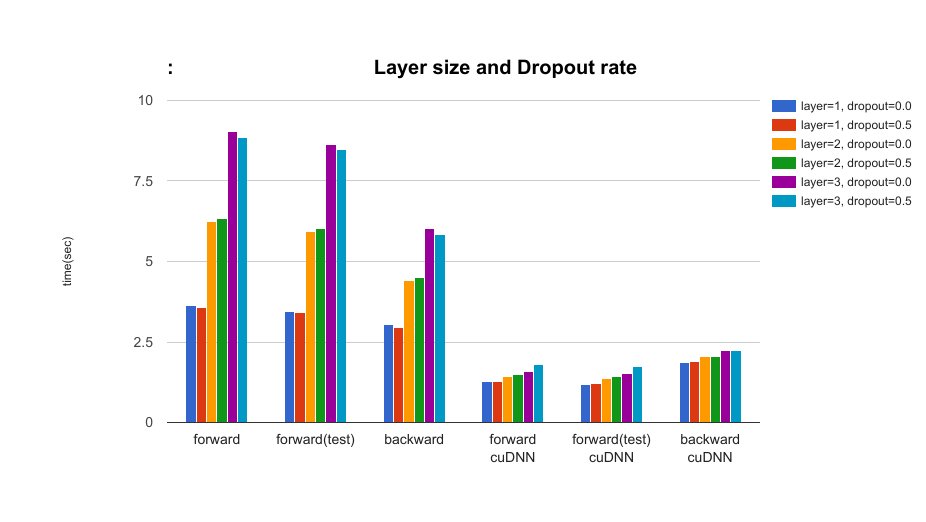

Comparing the relative efficiency of running the LSTM model on the GPU...

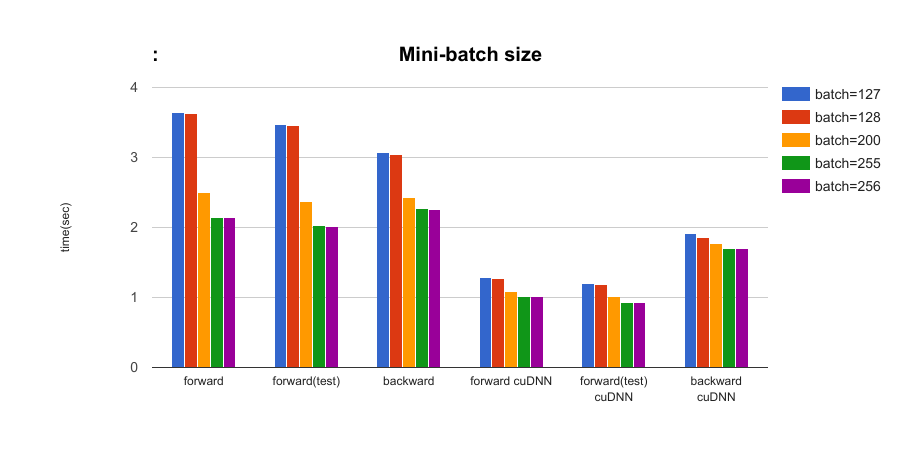

python - Unexplained excessive memory allocation on TensorFlow GPU (bi-LSTM and CRF) - Stack Overflow